Over the last few months, we’ve been doing themed weeks on social media. We started with a week on agile, then user research and service design. This week was all about accessibility.

For each one, we’ve done a live Periscope broadcast with an expert. These broadcasts have been very popular, but there’s a problem which we’ve known about and struggled with from the start: Periscope isn’t as accessible as we’d like it to be.

To date, we’ve worked around this by getting each broadcast transcribed as soon as possible after the event, then posting the transcription online. But we’ve always wondered if there was a better way to make the actual broadcast itself more accessible.

It’s complicated, but it works

The challenge was to get both the live transcription and the video visible in Periscope. This isn’t possible to do within the Periscope app, so we had to find another way.

The solution we came up with involved a lot of wires and a lot of planning, but it worked.

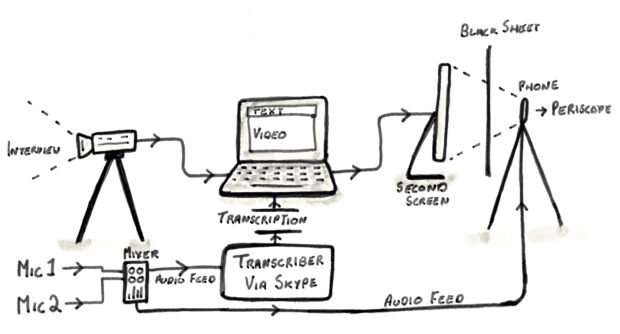

We combined the live footage and transcription on a laptop, then put both on a large second display screen, which we then filmed using the phone connected to Periscope.

The camera feed of the interview went directly into the same laptop, and we viewed this using YouTube’s live events streaming service. We didn’t stream it, though - we just used it as an easy way of displaying the live video within a browser window.

Meanwhile, we had to send live audio of the interview to a remote live transcriber. To do this, we fed the interview microphones into an audio recorder/mixer, and from that sent a stereo mix via USB to another laptop connected to the transcriber via Skype. A second audio feed went out from the mixer into the phone filming the screen.

The transcriber typed into a web form and the text was displayed in a browser window on the large display screen. On the display laptop, we were able to arrange two browser windows - one with the live video footage of our interview, and another above it with the live transcribed text.

We iterated to this

Getting to this solution involved several tests, which highlighted some other issues that we had to overcome. The first thing we noticed was reflections on the display screen. To overcome this, we filmed through a small hole in a black sheet. It looked a bit odd, and it’s not ideal, but it worked.

Another issue was the positioning of the two browser windows on the display screen. In early tests we started with the transcribed text at the bottom of the screen, below the video footage, but quickly realised that this would mean the text would be masked on Periscope by incoming comments from viewers. That’s why we moved the transcription text to the top of the screen, and just adjusted the framing of the interview to allow room in the shot.
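For anyone trying a similar layout, the window arrangement can be sketched in a few lines of Python. This is only an illustration, not something we ran on the day: the `Rect` type, the `stack_windows` function, the 1920x1080 screen size and the 25% caption strip are all assumptions made up for the example. It simply splits the display into a caption strip at the top and a video area below it, so the text stays clear of viewer comments at the bottom of the Periscope frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Position and size of a window on the display, in pixels (hypothetical type)."""
    x: int
    y: int
    width: int
    height: int


def stack_windows(screen_width: int, screen_height: int,
                  caption_fraction: float = 0.25) -> tuple[Rect, Rect]:
    """Split the screen into a caption strip on top and a video area below.

    caption_fraction is an assumed share of the screen height given to the
    transcription window; the original set-up did not record exact sizes.
    """
    if not 0 < caption_fraction < 1:
        raise ValueError("caption_fraction must be between 0 and 1")
    caption_height = round(screen_height * caption_fraction)
    # Captions go at the top, away from the comment overlay at the bottom.
    caption = Rect(0, 0, screen_width, caption_height)
    # The video fills whatever height is left underneath.
    video = Rect(0, caption_height, screen_width, screen_height - caption_height)
    return caption, video


if __name__ == "__main__":
    caption, video = stack_windows(1920, 1080)
    print("Transcription window:", caption)
    print("Video window:", video)
```

The resulting rectangles could be used to position the two browser windows by hand or with whatever window manager is available.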

What we learned from doing it once

Periscope is a terrific tool for live broadcasting via social media. We’d love to see it become more accessible by default, but the work we put into this broadcast shows just how difficult that will be. We hope the team at Periscope will at least give it some thought.

What we learned was that a bit of old-fashioned hacking about can achieve great results. The room we filmed in ended up becoming a mess of wires, tripods and light stands, and the set-up time was considerable. But it worked. (If anyone out there has suggestions for doing it more simply or in any other way, please get in touch by leaving a comment below. We’d love to hear them.)

Of course, making Periscope accessible for accessibility week isn’t enough. We want to make all our live broadcasts as accessible as possible. The main thing we learned this week was that in future, we’ll have to do the hard work to make that happen.

It was fun though. So we can’t wait for the next one.

Follow Mark and Graham on Twitter, and don’t forget to subscribe to the blog.

5 comments

Comment by Mairi Macleod posted on

Really, should you be using something that is so inaccessible? Periscope obviously never gave a thought to people with hearing impairments when it was developed, and they are not the only ones. All sorts of on-demand and online video is inaccessible because too many platforms have been developed in a way that is not initially compatible with subtitles. For an organisation like GDS, which is so fantastic at considering the needs of all users from the get-go, it's ironic to say the least that you would use something so at odds with your whole philosophy. Full marks for trying to work around its shortcomings, but what you did doesn't sound sustainable in the long term, lets Periscope off the hook, and probably wouldn't be feasible for many other users to replicate. I'm sorry I can't suggest an alternative for you - I don't know if any other live streaming apps are any better - but if you really think you have to use Periscope, you should use your good reputation to put pressure on them to make it accessible. For example, I believe the BBC did not give Chromecast access to the iPlayer till Google made Chromecast compatible for subtitles.

Comment by Suhail Adam posted on

Hi Mairi, thanks for the comment. We did evaluate other digital broadcasting services, including Meerkat. But we chose Periscope as it has a large user base and integrates well with Twitter. We do agree, Periscope is not great at accessibility and we will forward our feedback to them. Meanwhile, we will continue to look for other live broadcasting options that better meet the needs of our users.

Comment by Mairi Macleod posted on

http://blogs.wcode.org/2015/10/live-streaming-captions/

This is also a bit of a rigmarole, but sounds as though it might have fewer wires than your method, and certainly no black cloth?

Comment by Suhail Adam posted on

Hi Mairi, thanks for the feedback. We'll take a look at this to see what we can learn. Thank you. Suhail

Comment by Lyndon Borrow posted on

Hi fellas,

I was chatting with another guy about using live streaming or Periscope, and I've tried to encourage him to make it accessible for deaf / hard of hearing people. He mentioned that he was put off by the potentially high cost and complicated set-up, as he had seen your "Hacking Periscope for accessibility" blog earlier.

I thought I'd set the record straight here for everyone: it is not as expensive, and there is no need for extra wiring, equipment and set-up.

I work for the British Deaf Association, and I do a lot of live streaming for deaf / hard of hearing people with subtitles and sign languages. You only need a smartphone on a tripod to live-stream the speaking presenter sitting next to a sign language interpreter, and a monitor/TV screen standing behind them or wherever it fits, with the text feed from an STT / Palantypist on site (behind the camera) or working remotely. Please see this sample photo I have whipped up in Photoshop to give you an idea:

http://www.lyndonborrow.com/download/Periscope-Sample-GDS3.jpg

No extra equipment needed, set-up by one person only and it's dramatically cheaper. I wish I could have helped you guys earlier. Maybe next time!

Kind regards,

Lyndon